Build and run Clojure with Multi-stage Dockerfile

Deploying a Clojure service is simple. It requires only an Uberjar (an archive file containing the Clojure project, its dependencies and the Clojure run-time) and a Java Run-time Environment (JRE).

A Clojure service rarely works in isolation. Although many services are accessed via a network connection (defined in environment variables), provisioning containers to build and run Clojure alongside any other services becomes more valuable as the complexity of the architecture grows.

A Multi-stage Dockerfile is an effective way to build and run Clojure projects in continuous integration pipelines and during local development where multiple services are required for testing.

Docker Hub provides a wide range of images, supporting development, continuous integration and system integration testing.

Multi-stage Dockerfile

A multi-stage Dockerfile contains a builder stage and a run-time stage (usually unnamed). There may also be a common stage used by both the builder and run-time stages.

The builder stage should be optimised for building the Clojure project, i.e. caching dependencies and layers so that only the layers that change are rebuilt.

The run-time stage should be optimised for running the service efficiently and securely, including only essential files for a minimal size.

The uberjar created by the builder stage is copied to the run-time stage to keep the run-time image as clean and small as possible (minimising resource use).
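A minimal sketch of the two-stage structure, before any cache optimisation. The image tags, the /build and /service paths, the :build alias with an uberjar function and the uberjar file name are all assumptions for illustration.

```dockerfile
# Builder stage: named so the run-time stage can copy from it
FROM clojure:temurin-17-tools-deps-1.11.1.1182-alpine AS builder
WORKDIR /build
COPY . /build/
# Assumes a :build alias and a build.clj that defines an uberjar function
RUN clojure -T:build uberjar

# Run-time stage: unnamed, based only on the Java run-time image
FROM eclipse-temurin:17-alpine
# Copy only the uberjar (path and file name are assumptions)
COPY --from=builder /build/practicalli-service.jar /service/
ENTRYPOINT ["java", "-jar", "/service/practicalli-service.jar"]
```

Only the final FROM stage ends up in the image that is run; the Clojure tooling and downloaded libraries stay behind in the builder stage.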

Example Multi-stage Dockerfile

A multi-stage Dockerfile for Clojure projects, derived from the configuration currently used for commercial and open source work. The example uses make targets, which run the Clojure commands defined in the example Makefile.

Official Docker images

Docker Hub contains a large variety of images; using those tagged as Docker Official Image is recommended.

- Clojure (official Docker image) provides tools to build Clojure projects (Clojure CLI, Leiningen, Boot)

- Eclipse Temurin OpenJDK (official Docker image, built by the community) provides the Java run-time

Ideally, the builder and run-time images should share the same base image as a common ancestor, which helps maintain consistency between the build and run-time environments.

The Eclipse Temurin OpenJDK image is used by the Clojure Docker image, so both stages implicitly share the same base image without it needing to be specified in the project Dockerfile. The Eclipse Temurin image could be used as a base image in the Dockerfile, but that would mean repeating (and maintaining) much of the work already done by the official Clojure image.

Alternative Docker images

- CircleCI Convenience Images: Clojure, an optimised Clojure image for use with the CircleCI service

- Amazon Corretto is an alternative version of OpenJDK

Official Docker Image definition

An Official Docker Image means the configuration of that image follows the Docker recommended practices, is well documented and designed for common use cases.

The designation makes no claim about the correctness of the tools, languages or services the image provides, only about the way in which they are provided.

Clojure image as Builder stage

Practicalli uses the latest Clojure CLI release and the latest Long Term Support (LTS) version of Eclipse Temurin (OpenJDK). Alpine Linux is used to keep the image file size as small as possible, reducing local resource requirements (and image download time).

CLOJURE_VERSION overrides the version of Clojure CLI in the Clojure image (which defaults to the latest Clojure CLI release). Alternatively, choose an image tag that includes a specific Clojure CLI version, e.g. temurin-17-tools-deps-1.11.1.1182-alpine.

Builder Image with Clojure CLI version
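A sketch of the builder stage FROM line, pinning the Clojure CLI version through the image tag given above. The stage name builder is an assumption; it is the name the run-time stage refers to with COPY --from.

```dockerfile
# Builder stage: Clojure CLI 1.11.1.1182 on Eclipse Temurin 17 and Alpine Linux
FROM clojure:temurin-17-tools-deps-1.11.1.1182-alpine AS builder
```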

Create a directory for building the project code and set it as the working directory within the Docker container, giving RUN commands a path to execute from.
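A minimal sketch, assuming /build as the directory name (any path that does not clash with files in the image works):

```dockerfile
# Create a directory for the project code
# (WORKDIR would also create it if it did not exist)
RUN mkdir -p /build
# RUN, COPY and ADD instructions now use /build as the current directory
WORKDIR /build
```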

Cache Dependencies

Clojure CLI is used to download dependencies for the project and any other tooling used during the build stage, e.g. test runners, packaging tools to create an uberjar. Dependency download should only occur once, unless the deps.edn file changes.

Copy the deps.edn file to the build stage and use the clojure -P prepare (dry run) command to download the dependencies without running any Clojure code or tools.

The dependencies are cached in the Docker overlay (layer), and this cache is used on successive docker builds unless the deps.edn file changes.

The deps.edn file in this example contains the project dependencies and a :build alias used to build the Uberjar.
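A sketch of the dependency caching layer, assuming the working directory is /build and that the :build alias is written for use with -T (so its dependencies replace the project dependencies and are prepared separately):

```dockerfile
# Copy only deps.edn so this layer is rebuilt only when dependencies change
COPY deps.edn /build/
# -P prepares (downloads) dependencies without running any code:
# first the project dependencies, then those of the :build alias
RUN clojure -P && clojure -P -T:build
```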

Build Uberjar

Copy all the project files to the docker builder working directory, creating another overlay.

Copying the src directory and other files in a separate overlay from the deps.edn file ensures that changes to the Clojure code or configuration files do not trigger downloading the dependencies again.

Run the tools.build command to generate an Uberjar.

:build is an alias to include Clojure tools.build dependencies which is used to build the Clojure project into an Uberjar.
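A sketch of the build layer, assuming the working directory is /build and that the project's build.clj defines an uberjar function (a common tools.build convention, not something confirmed here):

```dockerfile
# Copy the rest of the project (filtered by .dockerignore) in its own layer
COPY ./ /build/
# Build the uberjar with tools.build via the :build alias
RUN clojure -T:build uberjar
```

Because this COPY comes after the dependency layer, editing source files only invalidates this layer and the ones after it.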

Using make task for build

When using make for the build, also copy the Makefile to the builder stage and call the deps target to download the dependencies.

Ensure the deps target in the Makefile depends on the deps.edn file, so the target is skipped if that file has not changed.

Call the dist target to build the Uberjar.
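A sketch of the same builder steps driven by make, assuming the working directory is /build and that the Makefile defines deps and dist targets as described above:

```dockerfile
# Assumes make is available in the builder image;
# if it is not, add it first with: RUN apk add --no-cache make
COPY deps.edn Makefile /build/
# Download dependencies via the Makefile deps target
RUN make deps

# Copy the project and build the uberjar via the dist target
COPY ./ /build/
RUN make dist
```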

Docker Ignore patterns

A .dockerignore file in the root of the project defines file and directory patterns that Docker excludes from the build context, so they cannot be copied by the COPY command. Use .dockerignore to avoid copying files that are not required for the build.

Keep the .dockerignore file simple by excluding all files with the * pattern, then use the ! character to explicitly include the files and directories that should be copied.

Docker ignore patterns

The Makefile and test-data directory are commonly used by Practicalli, although they are not generally needed.

OpenJDK for Run-time stage

The Alpine Linux version of the Eclipse Temurin image is used, as the Alpine Linux base image is around 5MB in size, compared to 60MB or more for other operating system images.
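A sketch of the run-time stage FROM line, assuming Java 17 to match the builder stage. Leaving the stage unnamed is enough, as it is the final stage in the Dockerfile.

```dockerfile
# Run-time stage: Eclipse Temurin 17 on Alpine Linux
FROM eclipse-temurin:17-alpine
```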

Run-time images are often stored in a registry, e.g. AWS Elastic Container Registry (ECR). LABEL adds metadata to the image, helping it to be identified in a registry or in a local development environment.

Add meta data to the docker configuration
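A sketch of image labels. The keys prefixed with org.opencontainers.image are standard OCI annotation keys; all values here are placeholders to replace with the project's own.

```dockerfile
# Metadata to identify the image in a registry or local environment
# (all values are placeholders)
LABEL org.opencontainers.image.authors="team@example.com"
LABEL org.opencontainers.image.title="practicalli-service"
LABEL version="1.0"
LABEL description="Example Clojure service"
```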

Use docker inspect to view the metadata.

Optionally, add packages that support running the service or help debug issues in the container while it is running. For example, add dumb-init to manage processes, and the curl and jq binaries for manually running system integration testing scripts for API testing.

apk is the package tool for Alpine Linux, and the --no-cache option ensures the package index and cache files are not stored in the resulting image, saving resources. Alpine Linux recommends pinning versions with the ~= (approximately equal) operator, so any point release of the same major.minor version of the package can be used.
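A sketch of the package installation using the ~= notation described above. The version numbers are examples only and must be checked against the packages available for the Alpine release in the base image.

```dockerfile
# Install packages without keeping the apk index or cache in the image
# dumb-init: init process for signal handling
# curl and jq: manual system integration / API testing
# Versions are examples; check https://pkgs.alpinelinux.org/ for current ones
RUN apk add --no-cache \
    dumb-init~=1.2 \
    curl~=8.5 \
    jq~=1.7
```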

Check the Alpine packages site occasionally, as a pinned major.minor version may no longer be available once newer releases replace it (this happens infrequently).

Create Non-root group and user to run service securely

Docker runs as the root user by default, and if a container is compromised the root permissions could lead to a compromised host system. Add a user and group to the run-time image and create a directory to contain the service archive, owned by the non-root user. Then instruct Docker that all subsequent commands should run as the non-root user.

Non-root account creation
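A sketch using the BusyBox addgroup and adduser commands available on Alpine Linux. The user and group name clojure and the /service directory are assumptions.

```dockerfile
# Create a system group and a system user in that group
RUN addgroup -S clojure && adduser -S -G clojure clojure
# Directory for the service archive, owned by the non-root user
RUN mkdir -p /service && chown -R clojure:clojure /service
# Subsequent instructions, and the running container, use the non-root user
USER clojure
```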

Copy Uberjar to run-time stage

Create a directory to run the service or use a known existing path that will not clash with any existing files from the image.

Set the working directory and copy the uberjar archive file from the builder stage.

Copy Uberjar to run-time stage
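A sketch assuming the builder stage is named builder and writes the uberjar to /build/practicalli-service.jar. Adjust the path to match the actual build output.

```dockerfile
# Run the service from /service
WORKDIR /service
# Copy only the uberjar from the stage named builder
COPY --from=builder /build/practicalli-service.jar /service/
```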

Optionally, add system integration testing scripts to the run-time stage for testing from within the docker container.

Copy test scripts
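A sketch assuming a hypothetical test-scripts directory in the project, which must also be allowed through .dockerignore so it reaches the builder stage:

```dockerfile
# Optional: copy system integration test scripts from the builder stage
# (test-scripts is a hypothetical directory name)
COPY --from=builder /build/test-scripts/ /service/test-scripts/
```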

Set Service Environment variables

Define values for environment variables should they be required (usually for debugging), ensuring no sensitive values are used. Environment variables are typically set by the service provisioning the containers (AWS ECS / Kubernetes) or on the local OS host during development (Docker Desktop).

Environment Variables
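A sketch of non-sensitive defaults. The variable names SERVICE_PROFILE and HTTP_SERVER_PORT are hypothetical; use whichever variables the service actually reads.

```dockerfile
# Non-sensitive defaults only; override them at run time,
# e.g. docker run --env SERVICE_PROFILE=dev ...
# (variable names are hypothetical)
ENV SERVICE_PROFILE=prod
ENV HTTP_SERVER_PORT=8080
```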

Optimising the container for the Java Virtual Machine

A Clojure Uberjar runs on the Java Virtual Machine, a highly optimised environment that rarely needs adjusting unless there are noticeable performance or resource issues. The options most likely to be set control the heap size relative to the available memory, i.e. -XX:MaxRAMPercentage (and -XX:MinRAMPercentage for containers with a small memory limit).

java -XshowSettings -version displays VM settings (vm), Property settings (property), Locale settings (locale), Operating System Metrics (system) and the version of the JVM used. Add the category name to show only a specific group of settings, e.g. java -XshowSettings:system -version.

JDK_JAVA_OPTIONS can be used to tailor the operation of the Java Virtual Machine, although the benefits and constraints of options should be well understood before using them (especially in production).

Example: show system settings on startup, force container mode and set the maximum heap size to 85% of the memory available to the container.

Java Virtual Machine (JVM) options
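A sketch of the example options above, set in the run-time stage. The java launcher reads JDK_JAVA_OPTIONS automatically (JDK 9 and later); the 85% value comes from the example and is not a general recommendation.

```dockerfile
# -XshowSettings:system     show container resources detected at startup
# -XX:+UseContainerSupport  container mode (already the default on Linux)
# -XX:MaxRAMPercentage=85   maximum heap as 85% of the container memory
ENV JDK_JAVA_OPTIONS="-XshowSettings:system -XX:+UseContainerSupport -XX:MaxRAMPercentage=85"
```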

Relative heap memory settings (-XX:MaxRAMPercentage) should be used in containers rather than fixed-value options (-Xmx), as the service provisioning the container may control and change the resources available to the container on deployment (especially on a Kubernetes system).

Options that are most relevant to running Clojure & Java Virtual Machine in a container include:

- -XshowSettings:system: display (container) system resources on JVM startup
- -XX:InitialRAMPercentage: percentage of real memory used for the initial heap size
- -XX:MaxRAMPercentage: maximum percentage of real memory used for the maximum heap size
- -XX:MinRAMPercentage: minimum percentage of real memory used for the maximum heap size on systems with small physical memory
- -XX:ActiveProcessorCount: the number of CPU cores the JVM should use, regardless of container detection heuristics
- -XX:±UseContainerSupport: enable (+) or disable (-) container mode; enabled by default on Linux, so setting it is only useful if the JVM is not detecting the container environment
- -XX:+UseZGC: low latency Z Garbage Collector (read the Z Garbage Collector documentation and understand the trade-offs before use); the default HotSpot garbage collector is the most effective choice for most services

Without performance testing of a specific Clojure service and analysis of the results, let the JVM use its own heuristics to determine the optimal strategies.

Provide Access to running Clojure service

If the Clojure service listens for network requests when running, then the port it listens on should be exposed so the world outside the container can communicate with the Clojure service.

For example, expose the port of the HTTP server that runs the Clojure service.
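A sketch assuming the HTTP server listens on port 8080. EXPOSE documents the port in the image metadata. It does not publish the port to the host; that happens at run time (e.g. docker run --publish).

```dockerfile
# Port the Clojure service HTTP server listens on (8080 is an assumption)
EXPOSE 8080
```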

Command to run the service

Finally define how to run the Clojure service in the container. The java command is used with the -jar option to run the Clojure service from the Uberjar archive.

The java command will use arguments defined in JDK_JAVA_OPTIONS automatically.

The ENTRYPOINT directive defines the command to run the service.

Define entrypoint to run the Clojure service
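A sketch using the exec (JSON array) form, so java runs directly without a wrapping shell. The uberjar path is an assumption that should match the COPY instruction in the run-time stage.

```dockerfile
# Run the Clojure service from the uberjar
# (java also reads JDK_JAVA_OPTIONS, if set)
ENTRYPOINT ["java", "-jar", "/service/practicalli-service.jar"]
```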

Understanding the Docker image ENTRYPOINT

ENTRYPOINT is the recommended way to run a service in Docker. CMD can be used to pass additional arguments to the ENTRYPOINT command, or used instead of ENTRYPOINT.

jshell runs by default in the Eclipse Temurin image, so jshell will run if neither an ENTRYPOINT nor a CMD directive is included in the run-time stage of the Dockerfile.

The ENTRYPOINT command runs as process id 1 (PID 1) inside the Docker container. On a Linux system, PID 1 must explicitly handle termination signals such as SIGTERM and clean up (reap) orphaned child processes.

dumb-init provides a simple process supervisor and init system, designed to run as PID 1 and manage all signals and child processes effectively.

Use dumb-init as the ENTRYPOINT command and CMD to pass the java command to start the Clojure service as an argument. dumb-init ensures TERM signals are sent to the Java process and all child processes are cleaned up on shutdown.
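A sketch of the split ENTRYPOINT and CMD form, assuming dumb-init was installed with apk (at /usr/bin/dumb-init) and the uberjar is at /service/practicalli-service.jar:

```dockerfile
# dumb-init runs as PID 1, forwards signals and reaps child processes
ENTRYPOINT ["/usr/bin/dumb-init", "--"]
# Passed to dumb-init as arguments: the command that starts the service
CMD ["java", "-jar", "/service/practicalli-service.jar"]
```

Keeping the java command in CMD means it can be replaced at run time (e.g. to start a shell for debugging) while dumb-init remains PID 1.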

Alternatively, run dumb-init and java within the ENTRYPOINT directive: ENTRYPOINT ["/usr/bin/dumb-init", "--", "java", "-jar", "/service/practicalli-service.jar"]

Build and Run with docker

Ensure Docker services are running, i.e. start Docker Desktop.

Build the service and create an image to run the Clojure service in a container with docker build. Use a --tag to help identify the image and specify the context (in this example the root directory of the current project, .)

After the Docker image has been built for the first time, any parts of the build that haven't changed will use their respective cached layers in the builder stage. This can lead to very fast builds, sometimes almost instant.

Maximising use of the Docker cache through careful ordering and design of commands in a Dockerfile can have a significant positive effect on build speed. Each command is effectively a layer in the Docker image, and if its respective files have not changed, the cached layer is reused instead of running the command again.

Run the built image in a docker container using docker run, publishing the port number so it can be used from the host (developer environment or deployed environment). Use the name of the image created by the tag in the docker build command.

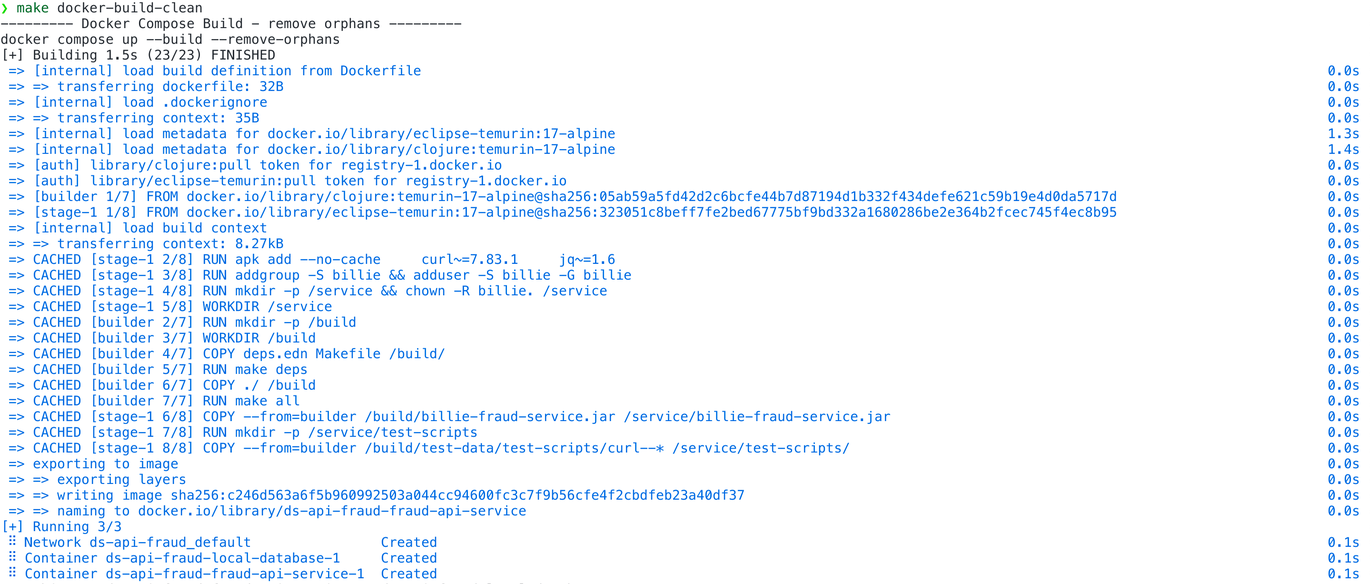

Docker Compose to orchestrate services locally

Consider creating a Docker Compose file (compose.yml) that defines all the services that should run to support local development.

Run docker compose up to start all the services, including pauses for health checks where service startup depends on other services.
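Docker Compose can delay starting a service until the services it depends on report as healthy (depends_on with condition: service_healthy). One way to provide that health status is a HEALTHCHECK in the run-time stage. A sketch follows, assuming curl is installed in the image and the service exposes a hypothetical /status endpoint on port 8080.

```dockerfile
# Mark the container healthy once the service responds successfully
# (the status URL is hypothetical)
HEALTHCHECK --start-period=10s --interval=5s --timeout=3s --retries=10 \
  CMD curl --fail http://localhost:8080/status || exit 1
```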

Summary

A Multi-stage Dockerfile is an effective way of building and running Clojure projects, especially as the architecture grows in complexity.

Organising the commands in the Dockerfile to maximise the use of docker cache will speed up the build time by skipping tasks that would not change the resulting image.

Consider creating a Docker Compose file to orchestrate the services required for development of the project and for local system integration testing.

Thank you.